I've been fascinated with artificial intelligence (that is, large language models, or LLMs) since they became publicly available in early 2023. I've been excited about getting AI to draw wemics for me. I've written screeds about that here, here, here, and here. I was a paying member of NightCafe for a while. I've used AI art here and there on my site, for header graphics and screed posts, and in my indie game, Labyrinths & Liontaurs.

Then I went to Gen Con 2025 and attended a couple of game-design panel discussions. The near-universal consensus among attendees and panelists was that generative AI art is plagiarism: LLMs take the creative work of others and use it to make derivative content. That's a fact, one I can't argue with. And after a lot of reflection, I can't see how it's okay to use other people's stuff without giving them credit or compensation. So before I release my own game, I plan to strip out all the AI art and replace it with art of my own.

But I'm not going to go retcon my past screeds on the topic (links above). That smacks of revisionism, and of rewriting history. I think it is useful to have a record of AI and my own AI journey. Likewise, I'm keeping my link to NightCafe live, though I've stopped paying for membership.

I've poked around the intertubes a bit and found many more gamers, game designers, and game companies embracing this position. Here are a few examples.

The editorial ethics and standards statement at Rascal, an indie games news site, is pretty fierce.

> Fuck AI, Fuck Plagiarism: Rascals, by their very nature, will never plagiarize another's work and will never utilize generative artificial intelligence in any capacity in our reporting. Any journalist who commits plagiarism will be held to account for their actions - up to and including proper attribution, removal of their work from our site, and a termination of their work with Rascal. If you, dear reader, suspect any plagiarism or AI-generated content on Rascal: tell us immediately.

Rascal also does not review games from companies that use AI-generated art, and rarely covers those companies at all.

Games Workshop, the Warhammer company, has declared that it will not use AI: "We do not allow AI-generated content or AI to be used in our design processes or its unauthorized use outside of GW." That's a quote I read at Gizmodo.

The ENNIE Awards are refusing to consider games that use generative AI:

> Generative AI remains a divisive issue, with many in the community viewing it as a threat to the creativity and originality that define the TTRPG industry. The prevailing sentiment is that AI-generated content, in any form, detracts from a product rather than enhancing it. .... the ENNIE Awards will no longer accept any products containing generative AI or created with the assistance of Large Language Models or similar technologies for visual, written, or edited content.

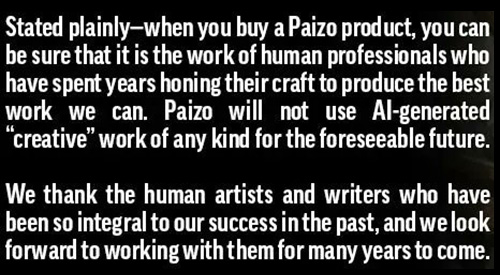

Paizo, publisher of Pathfinder, has also ruled out artificial intelligence. Here's the statement they released on Twitter (click through for the whole thing).

In a 2023 Substack essay, author lcamtuf points out the extent to which LLMs steal, especially on niche topics. "I think we don't grasp the vastness of the Internet and don't realize how often LLMs can rely on simply copying other people's work, with some made-up padding and style transfer tricks thrown in here and there." Granted, two years is forever in AI-land, but I suspect the plagiarism is still there. Masked better, maybe.

So yes, I agree that using generative AI is unfair to the artists and other creators whose work is used, without permission, to train AIs. That said, I still think it is complicated. After all, if AI takes in a vast amount of creative input and then creates derivative output, well, what about me? Isn't that what I do? My whole life has been spent consuming art: visual, narrative, musical, and more. Every "original" thing I've created over my long life has been influenced by the art I have consumed. Heck, not that I know much about rap music, but isn't the whole history of hip-hop about sampling the works of other artists? All creative people are influenced by other artists. How is AI different? (Randy Resnick has an interesting take.) It's complicated.

Inadvertent human plagiarism is a real thing. There are examples of storytellers who "stole" plots from books they had forgotten they'd ever read. In music, this sometimes lands musicians in court, as in the famous George Harrison case over "My Sweet Lord."

> In September 1976, the court found that Harrison had subconsciously copied "He's So Fine", since he admitted to having been aware of the Chiffons' recording. Owen said in his conclusion to the proceedings: Did Harrison deliberately use the music of "He's So Fine"? I do not believe he did so deliberately. Nevertheless, it is clear that "My Sweet Lord" is the very same song as "He's So Fine" with different words, and Harrison had access to "He's So Fine". This is, under the law, infringement of copyright, and is no less so even though subconsciously accomplished.

The point is, I guess, that subconscious plagiarism IS plagiarism, and Harrison had to pay up. Here are four more examples. Similarly, AI plagiarism is plagiarism, too.

But very few creators have managed to make something worthwhile with little or no influence from others (in outsider art or folk art, for instance). Aside from Grandma Moses, we all stand on the shoulders of giants, and of course, that is not an original thought.

But what about works that are in the public domain? Many works fall out of copyright early, and nothing stays copyrighted forever. Works created by the U.S. federal government are in the public domain. And many, many people are happy to just throw their work into the world, expecting no recompense, freely for anyone to use. As Woody Guthrie said of his great "This Land Is Your Land" ... "Copyrighted in U.S., under Seal of Copyright # 154085, for a period of 28 years, and anybody caught singin' it without our permission, will be mighty good friends of ourn, cause we don't give a dern. Publish it. Write it. Sing it. Swing to it. Yodel it. We wrote it, that's all we wanted to do."

That's the spirit of my own game, L&L. I'm releasing it under Creative Commons and d20 OGL licenses because I want people to use it. No charge, on the open Internet.

I do think the technology behind LLMs is really interesting. Human-created technology has finally passed the Turing Test. LLMs are not artificial general intelligences, but they sure look like a step on the path. So what if we created an LLM that was trained exclusively and wholly with public domain content? NOT with anything copyrighted at all. Would it be ethical to use such an AI? What if we trained an LLM only with content that the owners had offered for use (and for which they had been compensated)? Would that LLM be ethical to use?

It is definitely wrong for LLMs to train on stolen copyrighted content, no question. But if the problem is the taking of other people's work, then training that does NOT use content to which anyone has a copyright or ownership claim should be morally acceptable. It should be possible to train an AI with only inputs from the public domain. I'm looking forward to the creation of such an LLM. And to seeing if it can draw me a wemic.